Succinct Challenge-Oriented heap exploit introduction. Here we talk about the heap on Linux.

Implementation

You can visit GNU ftp to check Linux glibc source code. This tells how heap works in details, but truly hard to read.

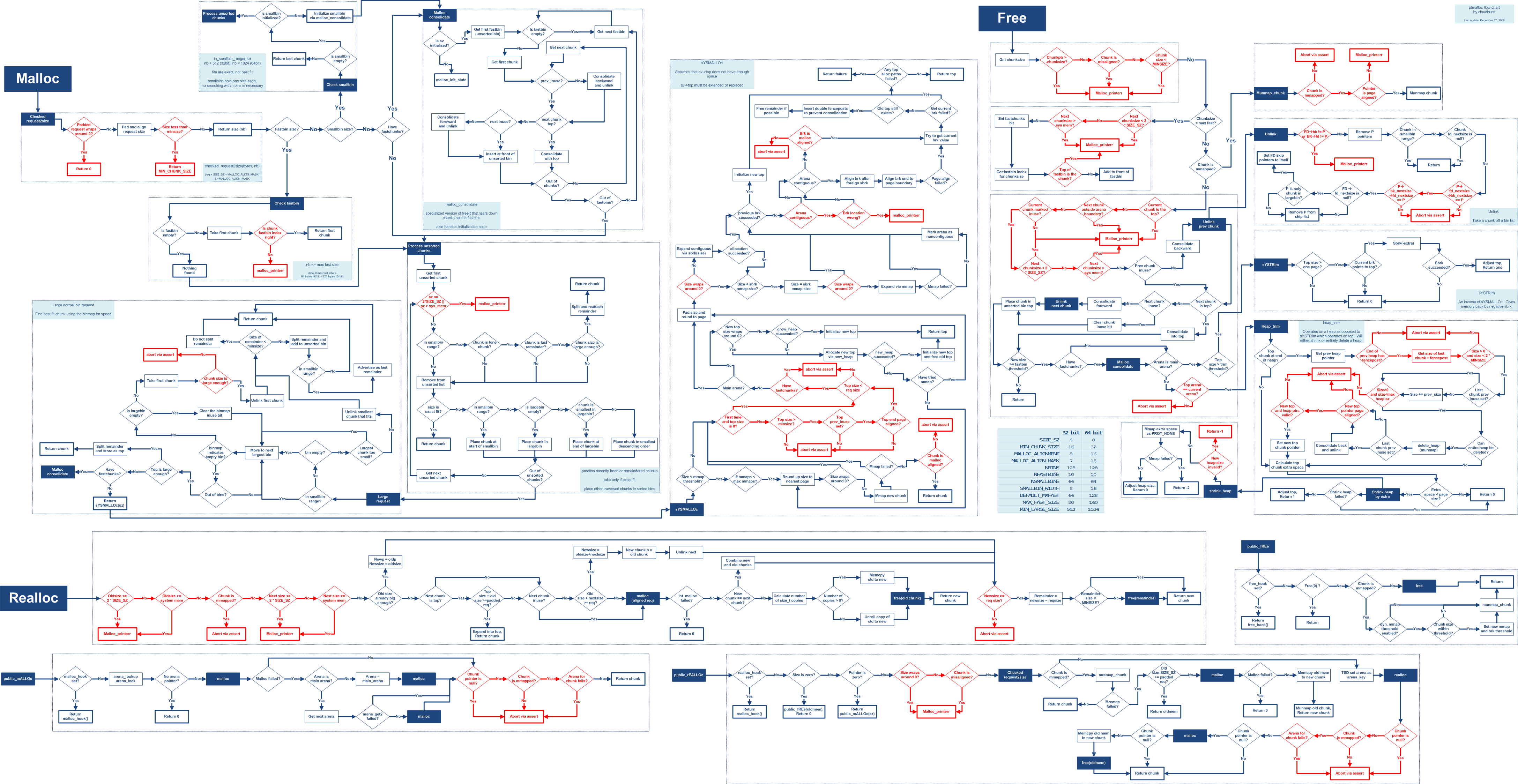

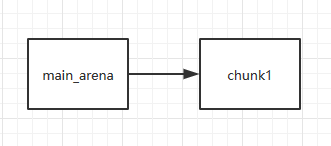

To understand Linux heap mechanism better, the following image gives us a general look, but it is an older version of glibc heap implementation.

you can also visit villoc to visualize the process of a program assigning memory when running (click here to see an example).

It’s better to visit other researchers’ update-to-date blog and read their analysis essays to learn heap.

Heap Overflow

It is similar to stack overflow since they both write too much things to mess up stack/heap.stack overflow usually overwrite ret addr of a function on the stack to control program flow; heap overflow can break/change next chunk structure, fake chunk for unlink, even overwrite things on stack (if chunk is alloced on stack).

Unlink

unlink is always used for changing where pointer points.

The unlink occurs only when you free a chunk, and another freed chunk (not in fastbin) is next to it. Then that freed chunk will be unlinked (that’s where unlink happens) from its original bin list, and merged with your newly freed chunk. Or when a largebin chunk is allocated the unlink also happens, but except checking fd and bk, the check of fd_nextsize and bk_nextsize is added

***Ancient unlink: ***

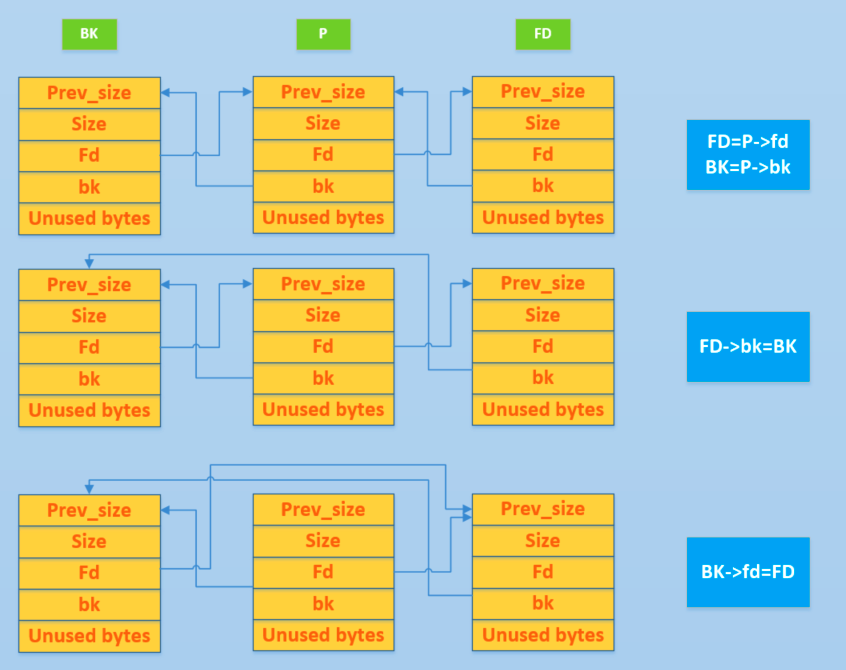

It just operates as the following image does.

Here we take 32-bit chunk as an example (every part in 32-bit takes 0x4 bytes):

The whole process of free chunk Q is:

glibcjudges this chunk to be small chunk- merge forward, find previous in use, so skip

- merge backward, find chunk

Nextchunkis freed - perform

unlinkforNextchunk

Without check for fd and bk, so we can set them any value. The process of unlink:

- FD =

Nextchunk->fd=target addr-12 - BK =

Nextchunk->bk=expect value - FD->

bk= BK, that is*(target addr-12+12) = BK = expect value - BK->

fd= FD, that is*(expect value +8) = FD = target addr-12

Finally we get *(target addr) = expect value (write anywhere anything), but also set *(expect value +8) = target addr-12 (sometimes needs to be bypassed).

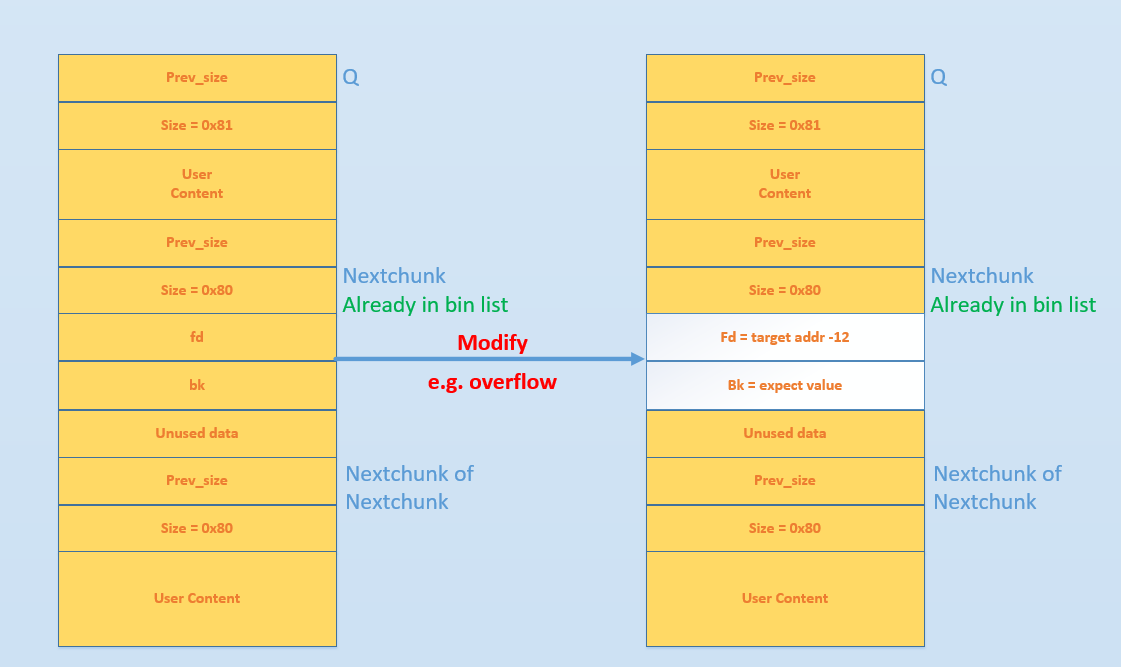

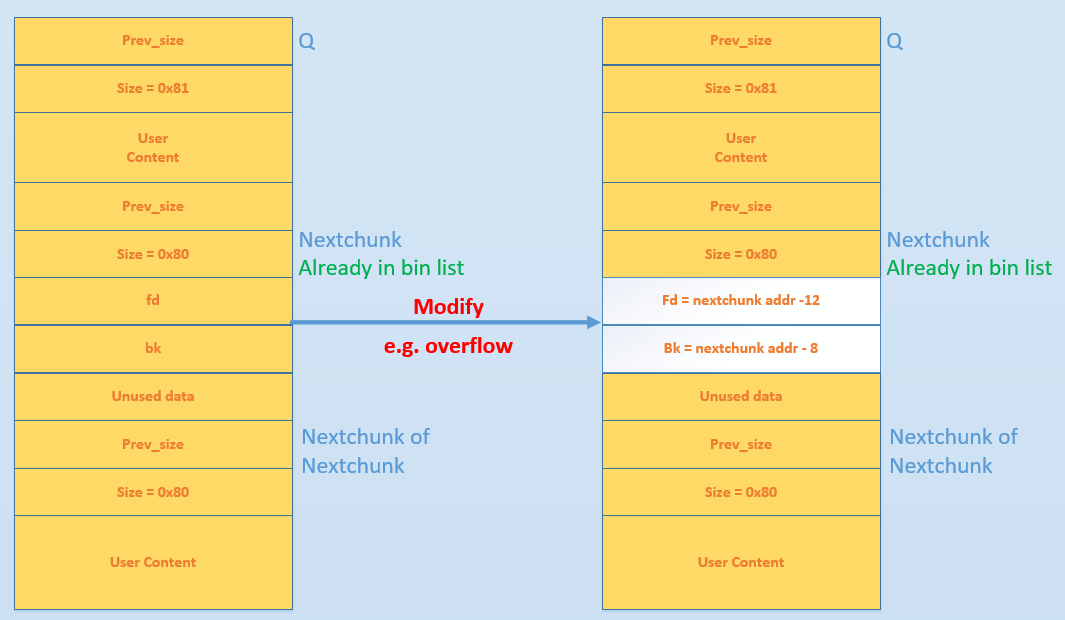

However more checks were added to unlink nowadays.

***Current unlink: ***

New checks here:

1 | // check size |

As we can see, FD->bk == P && BK->fd == P should be satisfied, so fd and bk cannot be set as above did.

To pass this check, we need:

- fakeFD ->

bk== P <=> *(fakeFD + 12) == P - fakeBK ->

fd== P <=> *(fakeBK + 8) == P

Then in unlink:

- fakeFD ->

bk= fakeBK <=> *(fakeFD + 12) = fakeBK - fakeBK ->

fd= fakeFD <=> *(fakeBK + 8) = fakeFD

That is:

- *P = P - 8

- *P = P - 12

So the result is *P = P - 12. Well, if we set fd(fakeFD) as nextchunk addr-12, and bk(fakeBK) as nextchunk addr-8, we will get: *nextchunk = nextchunk - 12.

This let nextchunk point to the place close to it. If we can write to it, we may even change nextchunk value to be GOT address and hijack GOT item.

Visit ADWorld PWN Challenge Area Write-ups (Unlink) for more challenges.

Chunk Extend/Overlapping

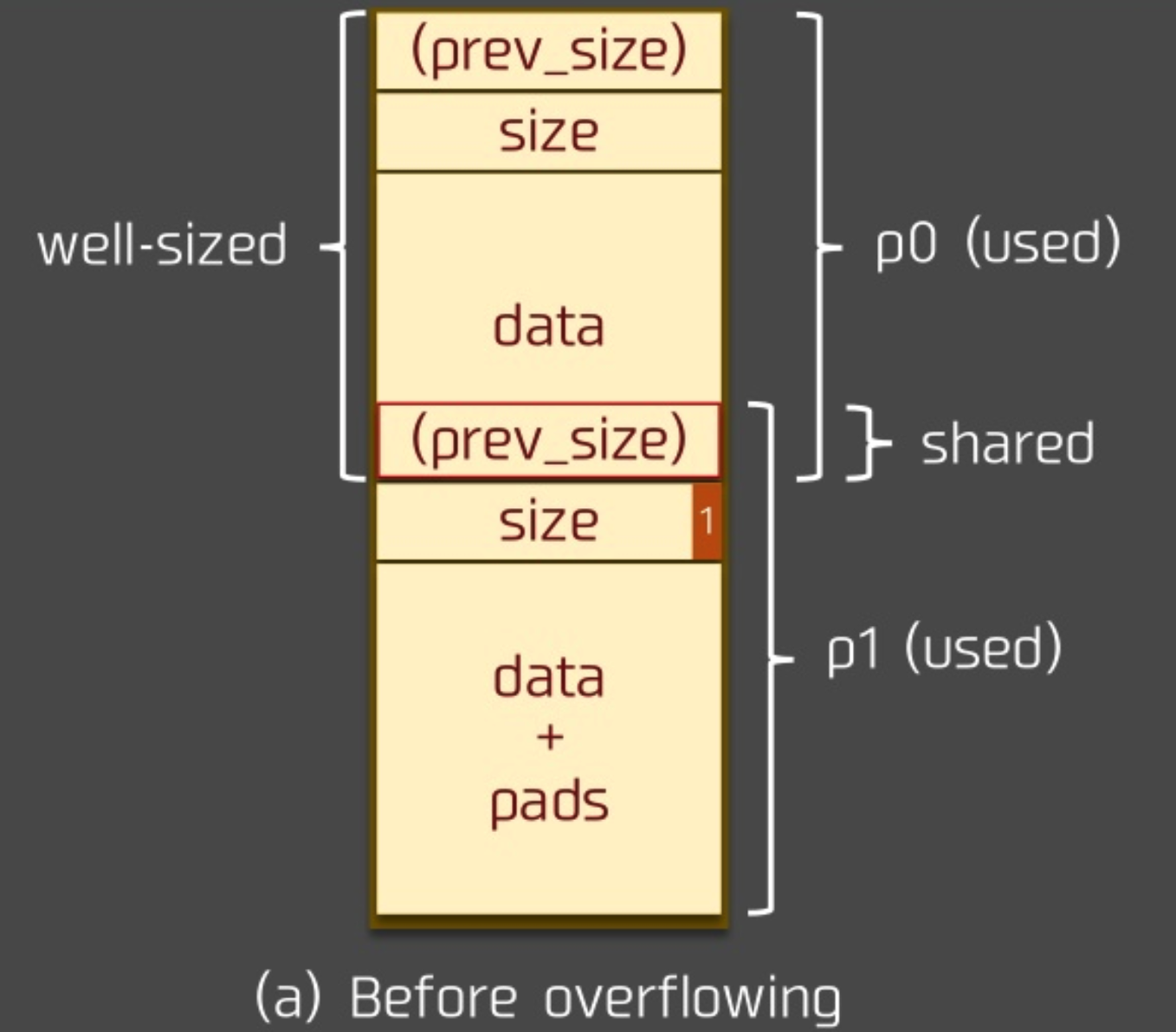

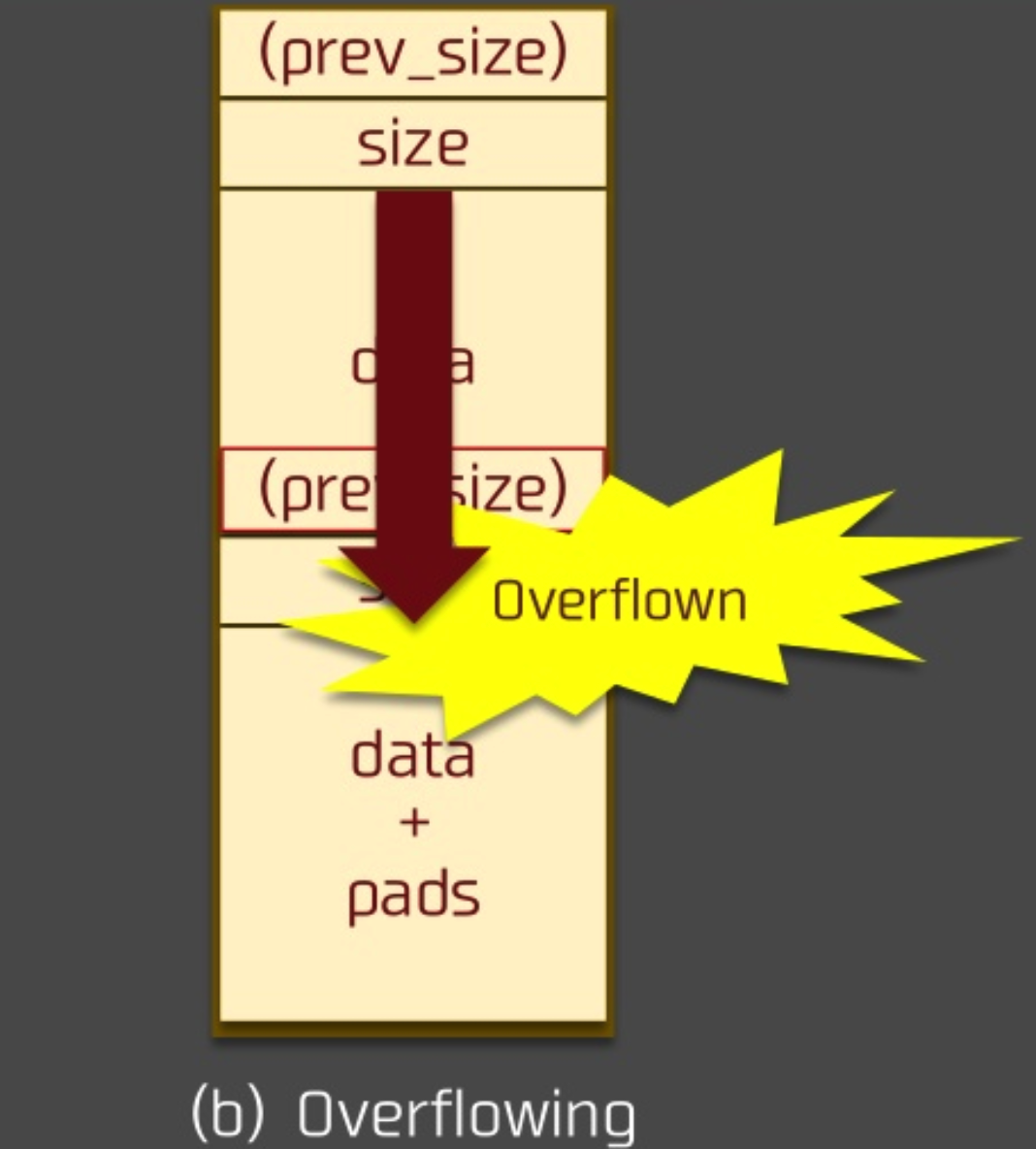

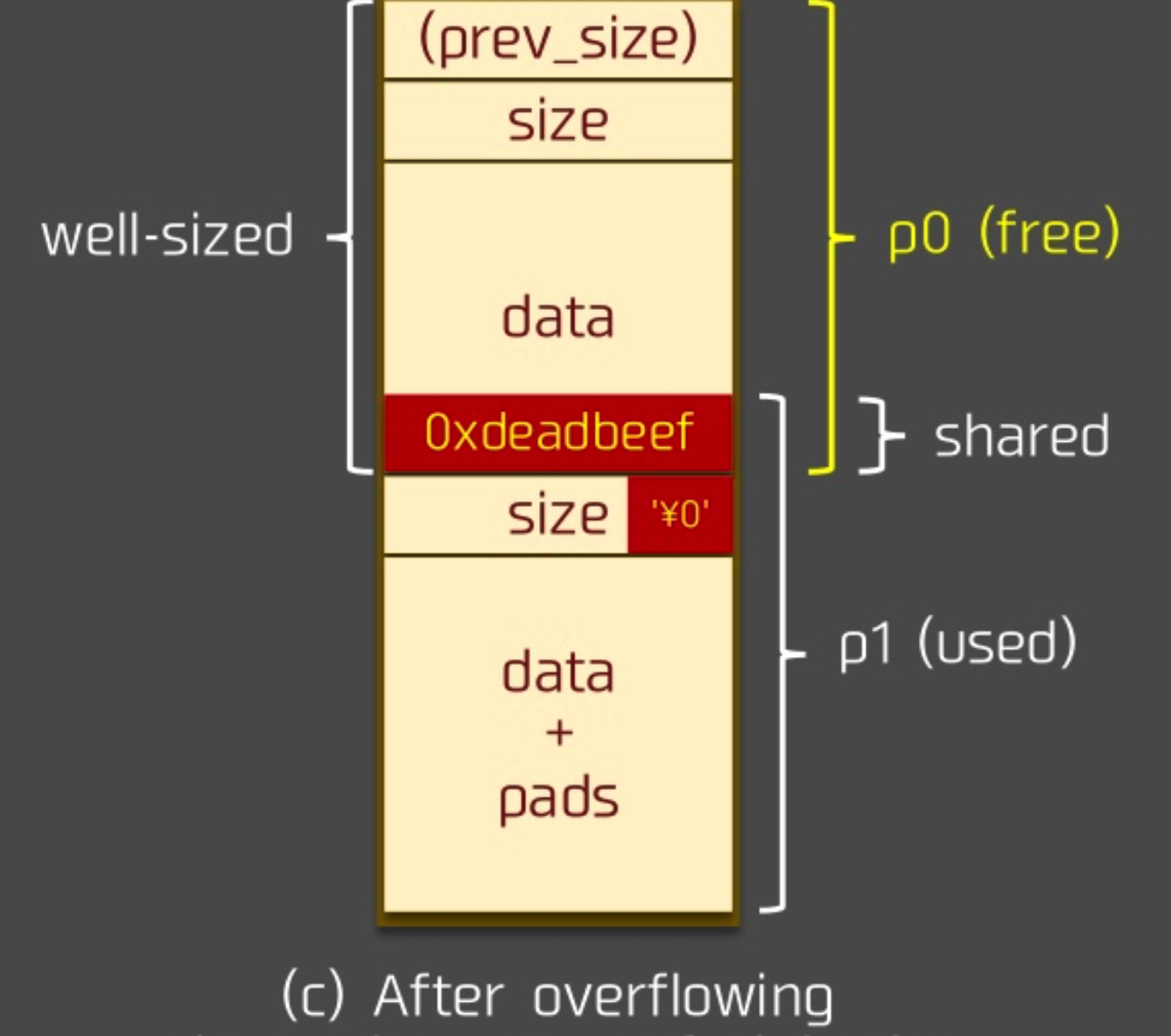

The ptmalloc uses chunk header to determine whether chunk is inuse, or locate the post/next chunk.

if we adjust (extend/shrink) a chunk’s size part, next we malloc/free it, the eighboring chunks will be effected (be included into the chunk we operates or sth).

Use After Free

The UAF vulnerability exist when you free something but not set that pointer as NULL, while when editing you dont check whether it is freed.

For example: if program prevents you from writing shellcode to A item, but you can write it to B item directly. So you can free A item first and re-assign it as B item, write shellcode to it, and still use it as A item for evil things.

Visit ADWorld PWN Challenge Area Write-ups (UAF Part) to see more challenges.

Fastbin Attack

Attacks around fastbin, includes:

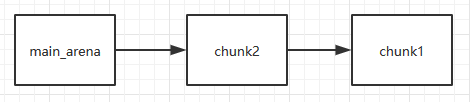

fastbindouble free

First free chunk1

Second free chunk2

Thirdly, free chunk1

Then we can make multiple pointers control the same chunk.

a technique in the Malloc Maleficarum. It is to fake one fastbin chunk at some place, which should also bypass some limits in free, to successfully allocate it.

Fake a chunk in fastbin size, find a way to free it into fastbin list, and take over the palce through allocating fastbin of that size.

Alloc to Stack

Changefdto points to our carefully constructed fakechunkonstack, then we fetch it to edit theret addr.Arbitrary Alloc

The target changes fromstackto anywhere.

Unsorted Bin Attack

Since it works differently from fastbin, it can only assign some big number (you will know later) to target address.

It fakes an unsorted bin chunk at the end of the bin list, and then allocate all the other real bins in the same list.

When there is only the fake bin in list, its fd points to the fd of unsortedbin in malloc_state struct, might be main_arena + 0x88. In glibc it must starts with 0x7f (can be regarded as the size of a fastbin). So it works for fastbin attack to find/make the target.

Large Bin Attack

It happens when the largebin double linked list inserting or removing chunks, and it leads to:

- Unexpected memory allocating: when requesting for a

largebin(malloc), fake thebk_nextsizeof thelargebinchunk first.- set

bk_nextsizeas the target address - set target’s fake

fdandbkas what we did inunlinkto bypassunsorted bin unlink. - set target’s fake

bk_nextsizeandfd_nextsizeas0to skiplarge bin unlinkoperation (it will dounsorted bin unlinkinstead). - the fake chunk starting at target address will be allocated.

- set

- Write

heapaddress anywhere: when inserting alargebinchunk (free), fake thebk_nextsizeandbk.- set

fwd->bk_nextsizeasevil_addr-0x20. After executingvictim->bk_nextsize = fwd->bk_nextsizethevictim->bk_nextsizeis also set asevil_addr-0x20. Thenvictim->bk_nextsize->fd_nextsize = victimwritesvictim addrtoevil_addr-0x20->fd_nextsize, that is writingvictim addrtoevil_addr.

- Related codes:

1

2

3victim->bk_nextsize = fwd->bk_nextsize;

...

victim->bk_nextsize->fd_nextsize = victim;- set

fwd->bkasevil_addr-0x10. Afterbck = fwd->bkit setsbckasevil_addr-0x10. Then thebck->fd = victimwritesvictim addrtoevil_addr-0x10->fd, that is writingvictim addrtoevil_addr.

- Related codes:

1

2

3bck = fwd->bk;

...

bck->fd = victim;- As for where to write, the

global_max_fastmight be a good place to write a big number to.

- set

House of Storm

We get two chances of writing heap address anywhere by faking bk_nextsize and bk of largebin chunk. Except global_max_fast another exploit is House of Storm. Combining with unsorted bin attack we can make it to be allocating any memory.

We have to meet some conditions:

- There is a chunk

Ainlargebin - And a chunk

Binunsorted bin - The size of

Bis greater than size ofA

When malloc a 0x48-0x58 chunk:

- If we set

A->bk_nextsizeasevil_addr-0x20+8-5, setA->bkasevil_addr-0x10+0x18and setB->bkasevil_addr - It first searches

unsorted binand sorts themsmall/large bin - Then triggers

unsorted bin attack,unsorted bin->bkpoints toevil_addr - Next, chunk

Bis inserted intolargebin. WhenPIEis enabled, theheapaddress always starts with0x56or0x55(fastbinchunk size), that size is suitable for ourmallocrequest. - As we write

heapaddress intoevil_addr+0x8-5(fake size) andevil_addr+0x18(fakebk), the fake chunk will be like (if we setevil_addras__free_hook):

1 | heap &__free_hook |

Since the size fits our malloc request well, we malloc the faked chunk starting at evil_addr.

So in the next big for loop (malloc loop) the faked unsorted bin will be removed/allocated.

As evil_addr->bk points to heap address, it bypasses limit of bck->fd = unsorted_chunks (av).

Tcache Attack

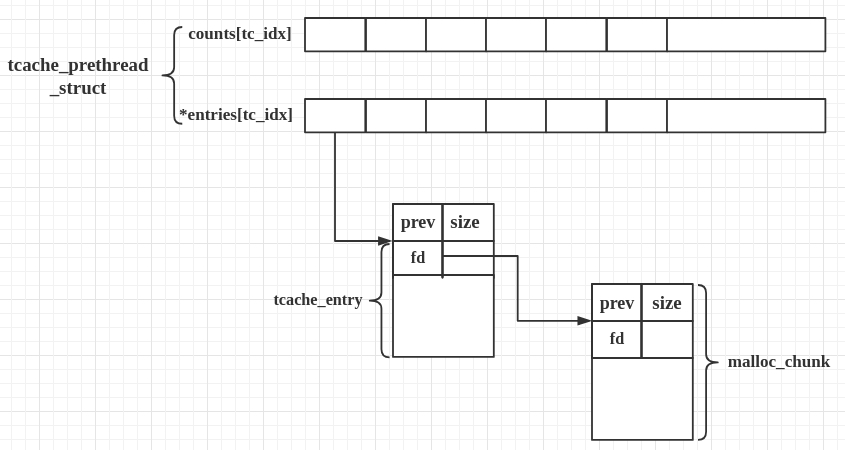

First have a look at the source of new structures:

tcache_entry

1 | /* We overlay this structure on the user-data portion of a chunk when the chunk is stored in the per-thread cache. */ |

- and

tcache_perthread_struct

1 | /* There is one of these for each thread, which contains the per-thread cache (hence "tcache_perthread_struct"). Keeping overall size low is mildly important. Note that COUNTS and ENTRIES are redundant (we could have just counted the linked list each time), this is for performance reasons. */ |

And the running mechanism is:

free: if size <smallbinsize- before

tcache:- put chunks into

fastbinorunsortedbin

- put chunks into

- after

tcache:- put into

tcache - but only 7 chunks by default, for

tcacheof same size - if the list is full, then put into

fastbinorunsortedbin

- put into

- before

malloc: if size intcachesize range- take from

tcacheif not empty - find if

fastbin/smallbin/unsorted binhave available chunks. - if they got, chunks will be moved from

fastbin/smallbin/unsorted bintotcacheuntil it’s full - then

mallocwill take chunk intcache

- take from

tcache poisoning

overwrite pointernext, without checks we canmalloceverywhere.tcache dup

since in libc 2.26 there is no checks for double free, so we can build cycliced list intcachelike what we do in oldfastbindouble freetcache perthread corruption

thetcache_perthread_structmanage the wholetcachestructure, if we find a way to control it, we can change theentriesand other related addressestcache house of spirit

usingtcacheto do house of spirit, fake a chunk on stack or sth to bypass some checks, link in intotcacheand we can alloc it.smallbin unlink

when puttingsmallbintotcache, there existsunlinkoperations with weak checkstcache stashing unlink attack

when usingcallocit won’t take chunks fromtcache, so there will be unlinkslibc leak

if we want to reproduceunsortedbin attack, we have to fullfill thetcache, then the chunks will be put intounsortedbinprotection enhancement in

glibc2.27

- in

tcache_entryadd ptrkey - set max num of

tcache - in

tcache_put(), letkeyof atcache_entrypoint totcache, for checking double free - in

tcache_get(), clean pointerkeywhen removingchunkfromtcache - in

int_free(), check whether the chunk to free is intcachelist, and will printfree(): double free detected in tcache 2 - in

_int_realloc(), usememcpy()instead copy bit by bit, more concise - in

do_set_tcache_count(), limittcache_countto be smaller thanMAX_TCACHE_COUNT(127)

Visit ADWorld PWN Challenge Area Write-ups (Tcache) for some challenges.

House of Einherjar

I can force malloc chunk at almost anywhere, by exploiting consolidate backward in free.

The key part of the code in free:

1 | /* consolidate backward */ |

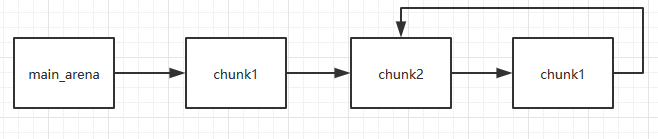

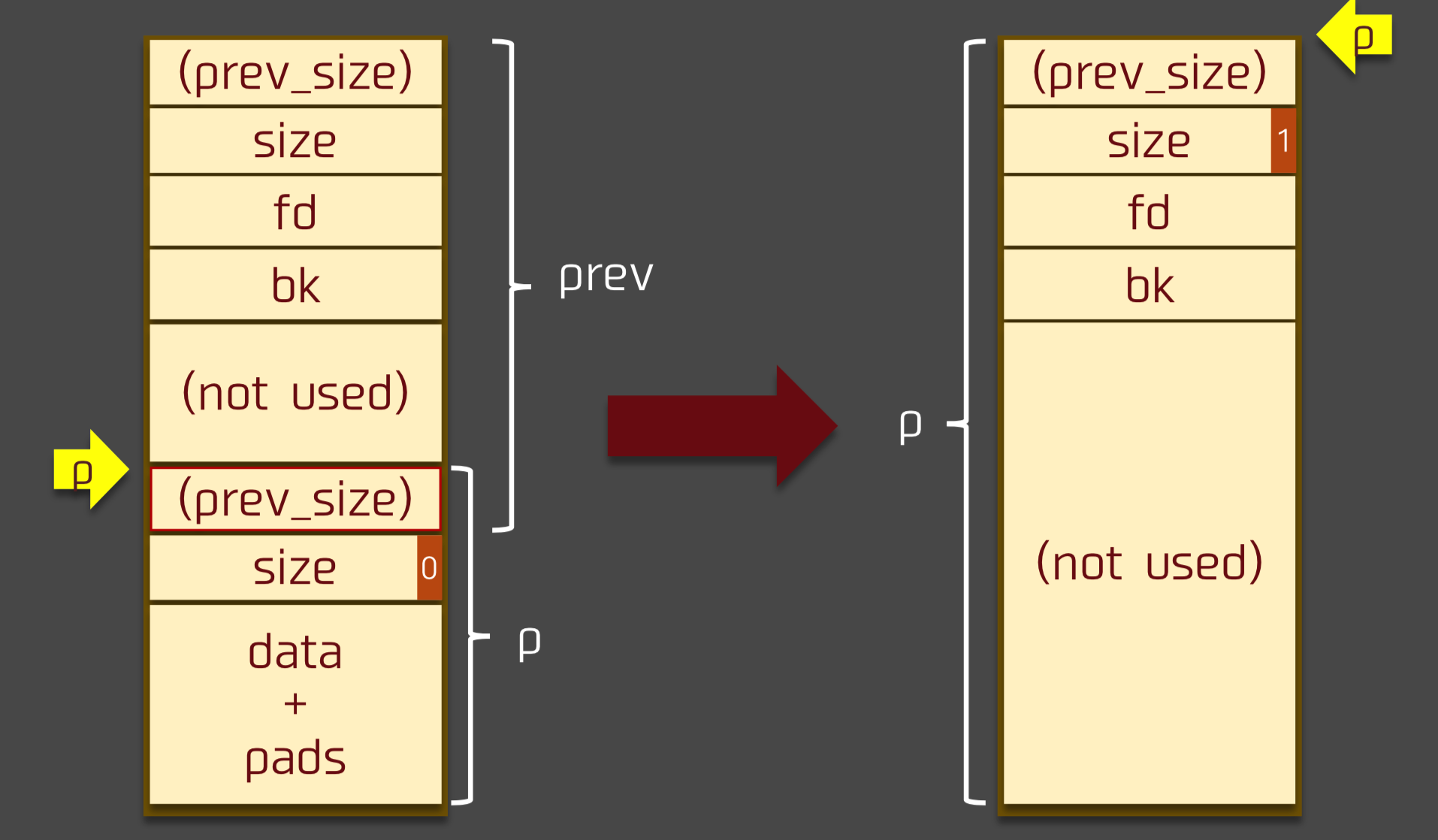

And the effect is like:

So the p we finally get is based on the original p and its prevsize, also with some security checks.

Before the overflow:

If we can overflow (fake) the prev_size and the PREV_INUSE (off-by-one), then:

After the overflow, we get new chunk: chunk_at_offset(p1, -((long) prevsize))

But there are constraints like unlink has, so we have to fake the structure at the corresponding chunk.

House of Force

Control top chunk’s size and lift/lower top chunk address to control GOT items (can be used in VM escaping).

House of Lore

Fake a small bin by changing fd and bk (bypass malloc check)

House of Orange

It aims to exploit vulns to gain free ability, in a program without the function to free.

It mainly depends on the process when top chunk cannot meet the new malloc request. The old top chunk will be freed to unsorted bin, therefore we can obtain unsorted bin without free function.

Normally we carefully constructed the fake size of top chunk, and malloc a bigger chunk to link this top chunk into unsorted bin; next we are able to allocate chunk cutted from unsorted bin. Later exploitation part is related to IO_FILE. (More at ADWorld PWN Challenge Area Write-ups (IO_FILE related))

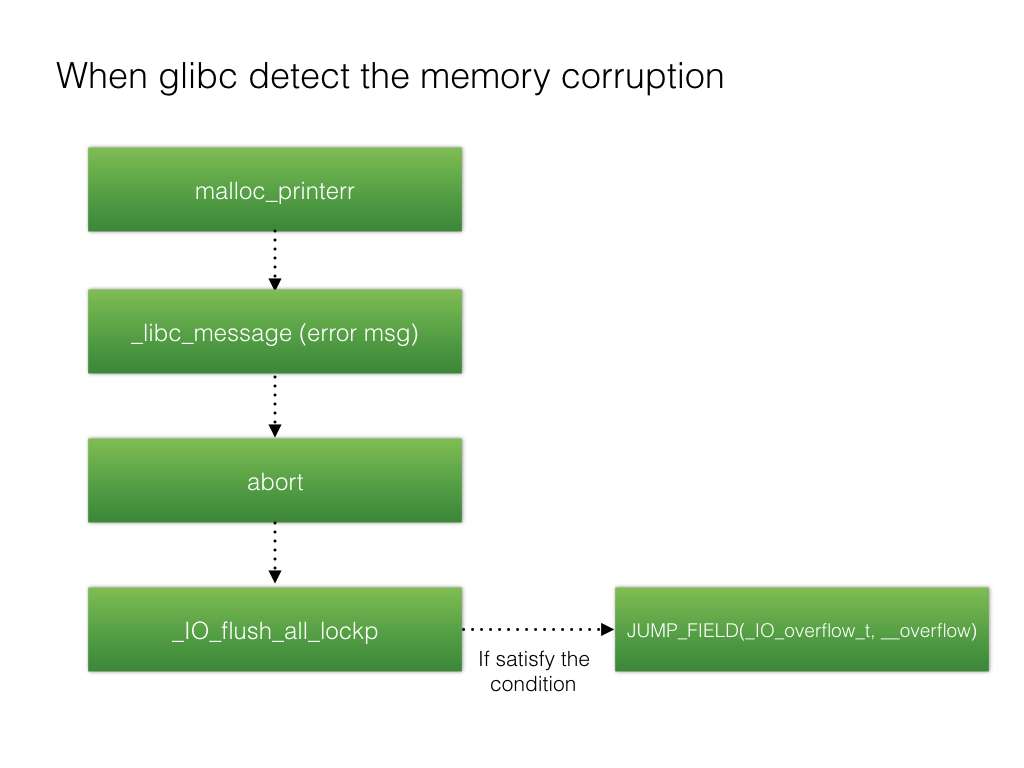

Before glibc 2.23 the exploit is: modify stdout (e.g. unsortedbin attack to modify _IO_list_all, then using unlink of unsortedbin to put chunk into smallbin, which is the corresponding _IO_list_all->_chain linking fake _IO_FILE) and trigger abort of libc, use _IO_flush_all_lockp in abort to control program flow.

Whole calling process:__libc_malloc => malloc_printerr => __libc_message => abort => _IO_flush_all_lockp

After glibc 2.23 (like glibc 2.24) adds _IO_vtable_check which limits vtable address to be between __stop___libc_IO_vtables and __start___libc_IO_vtables. So we use _IO_str_jumps->__finish.

After glibc 2.27 the flush of stream is discarded.

As for House of Orange by thread, when the size of thread_arena.top is not enough it will call sysmalloc to extend heap, and if original heap is larger than 0x4000000 the sysmalloc will free top chunk and re-alloc in low address.

House of Rabbit

Though it has a check for size when fetching bin in fastbin, there is no check when malloc consolidate works.

Therefore we can fake either fd or size part of the fastbin, and trigger malloc consolidate by merging the top chunk or malloc some big chunk.

After that we will find our forged overlapped chunk, or the chunk at our target address, in the unsorted bin, waiting to be allocated

House of Roman

A trick combining fastbin attack with unsorted bin attack.

By modifying the size of a chunk which belongs to unsorted bin before to be a fastbin chunk, we can get the main_arena + 0x88 remains in the fd of the fastbin. Then we can malloc the area around main_arena + 0x88 like malloc_hook, and use unsorted bin attack to write a main_arena + 0x88 (libc related address) to malloc_hook. By modifying the last 2 bytes, we can let malloc_hook points to one gadget (1/16 chance)

House of Spirit

It is to fake chunk by modifying just a little bit, get it freed to fastbin, and then allocate it to control a relatively big area.

House of Banana

After glibc 2.28 the _int_malloc() adds check for bk of unsortedbin to Invalidate unsortedbin attack. Then attackers consider largebin attack and using House of Storm to alloc anywhere.

However after glibc 2.29 the House of Storm is invalid, and after glibc 2.30 normal largebin attack also fails in some aspects (can only write unsortedbin address to anywhere in glibc 2.32, instead of writing any 2 heap addresses to 2 places).

The House of Banana starts from ptr _rtld_global in ld.so which pointing to struct rtld_global. There are many _dl_ns structs which store ELF segments symbol structs, include fini_array which is used in _dl_fini().

So what House of Banana do is to fake a rtld_global struct, and using largebin attack to write heap addr into rtld_global. When the program exit, it will execute faked fini_array.

House of Kiwi

Sometimes we encounter strict limits like sandbox restrictions, it gives us a special way to control the program.

First we should be able to trigger __malloc_assert() (heap overflow), second we should be able to write anywhere, edit _IO_file_sync, IO_helper_jumps + 0xA0 and IO_helper_jumps + 0xA8.

In __malloc_assert() has a flush(stderr) which calls _IO_file_jumps -> sync and there’re a few ways to trigger the assert.

By changing _IO_file_sync at _IO_file_jumps + 0x60 as setcontext + 61 and modifing IO_helper_jumps + 0xA0 and 0xA8 as ROP addr and ret gadget addr can perform stack pivoting.

House of Husk

https://ptr-yudai.hatenablog.com/entry/2020/04/02/111507